How much of your development budget is disappearing into custom AI integrations that should be straightforward? Or have you not yet started building AI integrations of your own?

OpenAI's latest Responses API updates directly target this resource drain, providing the infrastructure that transforms AI agents from expensive proof-of-concept demos into production-ready business tools that integrate with your existing systems.

The challenge has never been AI capability; it has been practical implementation. Complex orchestration logic, fragmented tool integrations, and enterprise security requirements have kept most AI agent projects in experimental phases. The updated Responses API removes many of these technical barriers while introducing capabilities that make AI agents genuinely useful for business operations.

Technical Architecture That Solves Real Problems

You know the pain points: development teams spend weeks building custom API integrations, managing conversation state becomes complex at scale, and tool orchestration requires significant engineering overhead. The Responses API architecture directly addresses these operational challenges.

Think of it like this: the Chat Completions API is stateless, so developers have to resend the entire conversation with every request. The Responses API can store previous interactions on OpenAI's side. A new request then needs only the latest message and a reference to the previous response (previous_response_id), and the conversation picks up exactly where it left off.
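To make the contrast concrete, here is a minimal sketch assuming the official OpenAI Python SDK. It only builds the keyword arguments you would pass to `client.chat.completions.create(...)` and `client.responses.create(...)`, so no network call is made. The model name and the `resp_123` ID are placeholders. In a real application, the ID would come from the `id` of the previous response.

```python
from typing import Optional

# Sketch only: these helpers build request arguments; no network calls are made.
MODEL = "gpt-4.1"  # placeholder model name


def chat_completions_request(history: list, user_message: str) -> dict:
    """Chat Completions is stateless: the whole history is resent on every call."""
    messages = history + [{"role": "user", "content": user_message}]
    return {"model": MODEL, "messages": messages}


def responses_request(user_message: str, previous_response_id: Optional[str] = None) -> dict:
    """Responses can keep state server-side: send the new turn plus a pointer to the last one."""
    request = {"model": MODEL, "input": user_message}
    if previous_response_id is not None:
        # Links this turn to the stored conversation (responses are stored by default).
        request["previous_response_id"] = previous_response_id
    return request


history = [
    {"role": "user", "content": "Summarise our Q3 sales call."},
    {"role": "assistant", "content": "Here is the summary..."},
]

stateless = chat_completions_request(history, "Now draft a follow-up email.")
stateful = responses_request("Now draft a follow-up email.", previous_response_id="resp_123")

print(len(stateless["messages"]))  # 3: the history travels with the request
print(sorted(stateful))            # ['input', 'model', 'previous_response_id']
```

The stateless payload grows with every turn. The stateful one stays the same size no matter how long the conversation runs.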

Direct Impact on Your Development Operations:

- Reduced Engineering Overhead: Built-in state management eliminates custom conversation tracking

- Simplified Tool Integration: Built-in tools such as web search, file search, and code interpreter run inside the API, without separate vendor integrations

- Faster Development Cycles: Unified API reduces integration complexity and testing requirements

- Scalable Architecture: Designed for enterprise-grade deployment at scale

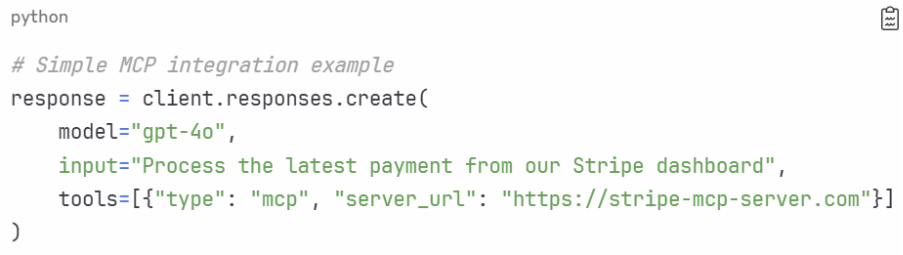

Revolutionary Tool Integration: MCP Changes Everything

The introduction of Model Context Protocol (MCP) support transforms how AI agents connect to external systems. MCP functions like "USB-C for AI applications", providing a standardised interface that replaces fragmented, one-off integrations with a unified protocol.

The MCP Advantage

With traditional function calling, every action requires multiple network hops: model → backend → external service → backend → model. With remote MCP servers, the Responses API connects to the server directly. Tool calls no longer have to round-trip through your own backend, which reduces latency and simplifies orchestration.

Real-World Impact

Developers can now connect OpenAI models to platforms like Stripe, Shopify, Twilio, and Zapier with just a few lines of code. Because the protocol standardises how tools are exposed, an agent can work with any remote MCP-compatible server without a custom implementation for each service.
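As a rough illustration of those "few lines", here is a sketch of a Responses API request that registers a remote MCP server as a tool. It assumes the `mcp` tool type with its `server_label`, `server_url`, `require_approval` and `allowed_tools` fields. The server URL and the `list_invoices` tool name are hypothetical. The resulting dict would be passed as `client.responses.create(**request)`.

```python
from typing import Optional

MODEL = "gpt-4.1"  # placeholder model name


def mcp_tool(server_label: str, server_url: str,
             allowed_tools: Optional[list] = None,
             require_approval: str = "never") -> dict:
    """Describe a remote MCP server as a Responses API tool entry."""
    tool = {
        "type": "mcp",
        "server_label": server_label,  # short name the model sees for this server
        "server_url": server_url,      # remote MCP endpoint the API connects to
        # "never" skips the approval step; otherwise calls wait for developer approval.
        "require_approval": require_approval,
    }
    if allowed_tools:
        # Optionally expose only a subset of the server's tools to the model.
        tool["allowed_tools"] = allowed_tools
    return tool


request = {
    "model": MODEL,
    "tools": [
        mcp_tool(
            server_label="billing",
            server_url="https://mcp.example.com/mcp",  # hypothetical URL
            allowed_tools=["list_invoices"],            # hypothetical tool name
        )
    ],
    "input": "Which invoices are overdue this month?",
}
```

Keep approvals switched on for servers you do not fully control, because the model decides what data it sends to them.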

OpenAI has joined the MCP steering committee, signalling its commitment to this open standard and to the growth of its ecosystem. As more remote MCP servers come online, agents will be able to reach a growing share of the business tools you already use.

Native Tool Capabilities: Beyond Traditional Limitations

The updated Responses API introduces powerful built-in tools that expand agent capabilities dramatically.

Image Generation Integration

OpenAI's latest image generation model, gpt-image-1, is now available as a native tool within the Responses API. This integration supports:

- Real-time streaming: Partial image previews can be streamed while the final image is generated

- Multi-turn refinement: Agents can edit and improve images step by step across turns

- Dynamic creation: Images can be generated and modified based on conversation context
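Here is a minimal sketch of how streaming previews and multi-turn refinement might be requested. It assumes the `image_generation` tool type, its `partial_images` option (which requires `stream=True`), and `image_generation_call` output items carrying base64 data in `result`. The model name and `resp_abc` are placeholders.

```python
from typing import Optional

MODEL = "gpt-4.1"  # placeholder model name


def image_request(prompt: str, previous_response_id: Optional[str] = None,
                  partial_images: int = 0) -> dict:
    """Build a Responses request that lets the model call the image_generation tool."""
    tool = {"type": "image_generation"}
    request = {"model": MODEL, "input": prompt, "tools": [tool]}
    if partial_images:
        # Low-fidelity previews while the final image renders; requires streaming.
        tool["partial_images"] = partial_images  # same dict object referenced by request
        request["stream"] = True
    if previous_response_id:
        # Refine the image from the previous turn instead of starting over.
        request["previous_response_id"] = previous_response_id
    return request


def extract_images(output_items: list) -> list:
    """Return the base64 image payloads from a response's output items (as dicts)."""
    return [item["result"] for item in output_items
            if item.get("type") == "image_generation_call" and item.get("result")]


first = image_request("A clean product shot of a ceramic mug", partial_images=2)
edit = image_request("Same mug, but matte black", previous_response_id="resp_abc")
```

The second request carries no image at all. Chaining it to the previous response is what lets the model edit the earlier result.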

Code Interpreter Enhancement

The Code Interpreter tool lets agents write and run Python code in a sandboxed environment to handle data analysis, mathematical computations, and logic-based tasks. Models such as o3 and o4-mini can use it within their reasoning process, which improves performance on technical benchmarks and enables more sophisticated analysis.
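Here is a sketch of a request that enables the tool. It assumes the `code_interpreter` tool type with an `auto` container that can reference previously uploaded files through `file_ids`. The model name is a placeholder and `file-abc123` is a hypothetical file ID.

```python
from typing import Optional

MODEL = "o4-mini"  # placeholder; any model that supports the tool


def code_interpreter_request(question: str, file_ids: Optional[list] = None) -> dict:
    """Build a Responses request that lets the model run code in a sandbox."""
    container = {"type": "auto"}  # let the API provision a sandboxed container
    if file_ids:
        container["file_ids"] = file_ids  # files uploaded beforehand, made available inside
    return {
        "model": MODEL,
        "tools": [{"type": "code_interpreter", "container": container}],
        "input": question,
    }


request = code_interpreter_request(
    "Compute month-over-month growth from the attached CSV and flag outliers.",
    file_ids=["file-abc123"],  # hypothetical file ID
)
```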

Advanced File Search

Enhanced file search supports metadata filtering, so agents can restrict retrieval to documents with specific attributes. The underlying vector stores can also be queried directly through a search endpoint. Together, these enable efficient information retrieval from large document sets and address enterprise needs for knowledge management and document analysis at scale.
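Here is a sketch of metadata filtering. It assumes the `file_search` tool's `filters` field with comparison (`eq`) and compound (`and`) filters over file attributes. The vector store ID and the `region` and `doc_type` attributes are hypothetical, and would need to be set on the files when they are added to the store.

```python
from typing import Optional

MODEL = "gpt-4.1"  # placeholder model name


def eq(key: str, value) -> dict:
    """Comparison filter: attribute `key` must equal `value`."""
    return {"type": "eq", "key": key, "value": value}


def all_of(*filters: dict) -> dict:
    """Compound filter: every sub-filter must match."""
    return {"type": "and", "filters": list(filters)}


def file_search_request(question: str, vector_store_ids: list,
                        filters: Optional[dict] = None, max_results: int = 5) -> dict:
    tool = {
        "type": "file_search",
        "vector_store_ids": vector_store_ids,
        "max_num_results": max_results,  # cap the number of retrieved chunks
    }
    if filters is not None:
        tool["filters"] = filters
    return {"model": MODEL, "tools": [tool], "input": question}


request = file_search_request(
    "What notice period do our APAC supplier contracts require?",
    vector_store_ids=["vs_contracts"],  # hypothetical vector store ID
    filters=all_of(eq("region", "APAC"), eq("doc_type", "contract")),
)
```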

Reasoning Model Breakthrough: o3 and o4-mini Tool Calling

Perhaps the most significant advancement is enabling o3 and o4-mini reasoning models to call tools and functions directly within their chain-of-thought processes. This capability produces more contextually rich and relevant responses while preserving reasoning tokens across requests.

Performance Implications

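- Improved Intelligence: Tool calls happen inside the model's reasoning process, so their results can shape its next reasoning step

- Reduced Costs: Reasoning tokens are preserved across tool interactions, so the model does not have to regenerate earlier reasoning

- Lower Latency: Built-in tools run inside the API, eliminating round-trips through your own backend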
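Here is a minimal sketch of one round of that loop with your own function. It assumes the Responses API's flat `function` tool format, `function_call` output items, and `function_call_output` input items. The `get_order_status` function and all IDs are hypothetical. Chaining the follow-up with `previous_response_id` is what lets the reasoning model continue from the reasoning it had already done.

```python
import json

MODEL = "o4-mini"  # placeholder reasoning model

ORDER_TOOL = {
    "type": "function",
    "name": "get_order_status",  # hypothetical function
    "description": "Look up the fulfilment status of an order.",
    "parameters": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
        "additionalProperties": False,
    },
}


def get_order_status(order_id: str) -> dict:
    """Hypothetical stand-in for a real order-management lookup."""
    return {"order_id": order_id, "status": "shipped"}


def follow_up_request(response_id: str, output_items: list) -> dict:
    """Answer the model's function calls and chain the next request to its reasoning."""
    results = []
    for item in output_items:
        if item.get("type") == "function_call" and item.get("name") == "get_order_status":
            args = json.loads(item["arguments"])  # arguments arrive as a JSON string
            results.append({
                "type": "function_call_output",
                "call_id": item["call_id"],  # ties the result to the original call
                "output": json.dumps(get_order_status(**args)),
            })
    return {
        "model": MODEL,
        "tools": [ORDER_TOOL],
        "previous_response_id": response_id,  # reuse the stored reasoning context
        "input": results,
    }


# Illustrative output items from a first response (real items carry more fields).
example_output = [
    {"type": "reasoning", "id": "rs_1", "summary": []},
    {"type": "function_call", "call_id": "call_1", "name": "get_order_status",
     "arguments": '{"order_id": "A42"}'},
]
next_request = follow_up_request("resp_1", example_output)
```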

Enterprise-Grade Features: Reliability and Security

OpenAI has introduced several enterprise-focused capabilities that address production deployment concerns.

Background Mode

Runs long tasks asynchronously: you start the request, then poll for the result instead of holding a connection open. This improves reliability for complex agent workflows that need extended processing time.
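Here is a sketch of the start-then-poll pattern. It assumes `background=True` on the request and response statuses such as `queued` and `in_progress` while work continues. The `retrieve` callable stands in for something like `client.responses.retrieve`. A fake is used below so the example runs offline, and the timeout values are arbitrary.

```python
import time
from types import SimpleNamespace

MODEL = "o3"  # placeholder model name

# Keyword arguments for client.responses.create(...); background mode relies on stored responses.
background_request = {
    "model": MODEL,
    "input": "Draft a full competitive analysis of our top five rivals.",
    "background": True,
}

PENDING = {"queued", "in_progress"}


def wait_for_response(retrieve, response_id, poll_seconds=2.0,
                      timeout_seconds=600.0, sleep=time.sleep):
    """Poll retrieve(response_id) until the response leaves the pending states."""
    waited = 0.0
    while True:
        response = retrieve(response_id)
        if response.status not in PENDING:
            return response  # e.g. completed, failed, cancelled, or incomplete
        if waited >= timeout_seconds:
            raise TimeoutError(f"{response_id} still {response.status} after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds


# Offline demo: a fake retrieve() that reports completion on the third poll.
_statuses = iter(["queued", "in_progress", "completed"])


def fake_retrieve(response_id):
    return SimpleNamespace(id=response_id, status=next(_statuses))


final = wait_for_response(fake_retrieve, "resp_bg_1", sleep=lambda s: None)
print(final.status)  # completed
```

Injecting `retrieve` and `sleep` keeps the polling logic testable without an API key.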

Reasoning Summaries

Returns concise, natural-language summaries of the model's internal reasoning. These give visibility into how the model reached an answer, enabling better debugging and optimisation.
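Here is a sketch of requesting and reading those summaries. It assumes `reasoning={"summary": "auto"}` on the request, and output items of type `reasoning` whose `summary` list holds `summary_text` parts. The helper works on plain dicts, for example the output of `response.model_dump()["output"]`.

```python
MODEL = "o3"  # placeholder reasoning model

request = {
    "model": MODEL,
    "input": "Should we consolidate our three EU warehouses? Think it through.",
    "reasoning": {"effort": "medium", "summary": "auto"},  # ask for a reasoning summary
}


def reasoning_summary(output_items: list) -> str:
    """Join the summary text of every reasoning item, in order."""
    parts = []
    for item in output_items:
        if item.get("type") == "reasoning":
            for part in item.get("summary", []):
                if part.get("type") == "summary_text":
                    parts.append(part["text"])
    return "\n".join(parts)
```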

Encrypted Reasoning Items

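Lets organisations that cannot have data retained by OpenAI, such as customers eligible for Zero Data Retention, still reuse reasoning across requests. Reasoning items are returned in encrypted form and passed back by the client, so nothing is stored on OpenAI's servers. This addresses enterprise security requirements for confidential business data.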
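Here is a sketch of the stateless pattern this enables. It assumes `store=False` together with `include=["reasoning.encrypted_content"]`, with the client carrying the full conversation, including encrypted reasoning items, into the next request. The output items below are illustrative, and the encrypted value is a placeholder string.

```python
MODEL = "o4-mini"  # placeholder reasoning model


def zdr_request(input_items: list) -> dict:
    """Build a request that keeps nothing server-side but returns reusable reasoning."""
    return {
        "model": MODEL,
        "input": input_items,
        "store": False,  # do not persist the response on OpenAI's side
        "include": ["reasoning.encrypted_content"],  # return reasoning as an encrypted blob
    }


def next_turn_input(previous_input: list, previous_output: list, user_message: str) -> list:
    """The client, not the server, carries the conversation forward."""
    return previous_input + previous_output + [{"role": "user", "content": user_message}]


turn_1 = zdr_request([{"role": "user", "content": "Review this contract clause for risk."}])

# Illustrative output items from turn 1 (real items carry more fields).
turn_1_output = [
    {"type": "reasoning", "id": "rs_1", "summary": [],
     "encrypted_content": "<opaque-encrypted-blob>"},  # placeholder value
    {"type": "message", "role": "assistant",
     "content": [{"type": "output_text", "text": "The indemnity cap is unusually low."}]},
]

turn_2 = zdr_request(next_turn_input(turn_1["input"], turn_1_output, "Suggest a safer wording."))
```

Because nothing is stored, `previous_response_id` is not available here. Resending the items is the trade-off for zero retention.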

Strategic Implementation for Technology Leaders

The Responses API represents more than an API upgrade: it's a platform consolidation that affects your technology stack decisions and resource allocation.

Team Productivity Impact

Your developers can now build sophisticated AI agents without the engineering overhead that previously made these projects resource-intensive. The unified API structure means faster prototyping, shorter development cycles, and more predictable project timelines.

Infrastructure Considerations

With the Assistants API scheduled to be retired by mid-2026, you have a clear migration timeline. This isn't urgent, but planning now prevents future technical debt and ensures your AI investments remain current.

Cost Structure Changes

The integrated tool approach can reduce operational complexity and associated infrastructure costs. However, evaluate the pricing implications of image generation and specialised tool usage against your current AI spending.

Why This Matters for Technology Strategy

OpenAI's Responses API updates represent a fundamental shift toward practical, production-ready AI agents. For technology leaders, this means the gap between impressive demos and useful business applications is finally closing.

Critical Strategic Considerations

- Platform Consolidation: OpenAI is building a comprehensive development ecosystem, not just adding features

- Industry Standards: MCP adoption positions your technology stack within emerging industry standards

- Production Readiness: Enterprise features demonstrate these tools are designed for real business deployment, not experimentation

The technical capabilities are available now, and the ecosystem is expanding rapidly. The strategic question isn't whether AI agents will transform operations; it's whether your organisation has the right implementation approach to capitalise on these capabilities.

What This Means for Your AI Strategy

These aren't just API updates; they're the building blocks for the next generation of business automation. OpenAI has directly tackled the three biggest barriers to practical AI agent deployment: complex integrations, unreliable tool access, and enterprise security concerns.

The strategic advantage goes to organisations that recognise this inflection point. While competitors struggle with fragmented AI implementations, forward-thinking companies can now build sophisticated agent workflows that integrate with their existing business systems.

The Window Is Open, But It Won't Stay That Way

The current moment presents a unique opportunity. The tools are production-ready, the ecosystem is expanding rapidly, and most organisations haven't yet realised what's possible.

The difference between successful AI agent implementation and expensive experimentation comes down to one factor: understanding what these tools can actually accomplish within your specific business context.

Most organisations approach AI agent development backwards, starting with the technology and hoping to find applications. The strategic approach begins with your operational challenges and maps them to the right combination of these new capabilities.

Ready to build AI agents that actually work?

At Adaca, our AI Pods combine these cutting-edge OpenAI capabilities with proven development expertise to deliver AI agents that integrate seamlessly with your existing systems. Whether you're looking to automate complex workflows, enhance customer experiences, or unlock insights trapped in your data, we help you navigate from possibility to profitable reality.

The window for competitive advantage is open now. Let's explore what's possible for your business.